"Genius is one percent inspiration and ninety-nine percent perspiration." -- Thomas Edison

In August 2022, I joined Bosch as a senior MLOps engineer to drive some of their machine learning use cases for automated parking and driving from the operational side. To that end, my team and I are providing a machine learning platform based on Kubernetes, Argo and AzureML. This state-of-the-art tech stack enables development teams to validate models on a broad scale with minimal effort while significantly reducing validation times.

Before joining Bosch, and after a brief postdoctoral period at TU Freiberg, I joined LogMeIn as a senior software engineer to push some of their machine learning efforts in Dresden. In particular, the team was focusing on gaining insights into LMI's real-time communication infrastructure and improving its monitoring capabilities. Coming from the field of robotics, I quickly realized that the underlying algorithmic requirements are remarkably similar. Essentially, users want to observe changes in the system and its surroundings as soon as they occur and react in a meaningful and situation-aware manner. This requires low-latency observation capabilities and, more importantly, a model of the expected behavior.

"If we knew what it was we were doing, it would not be called research, would it?" -- Albert Einstein

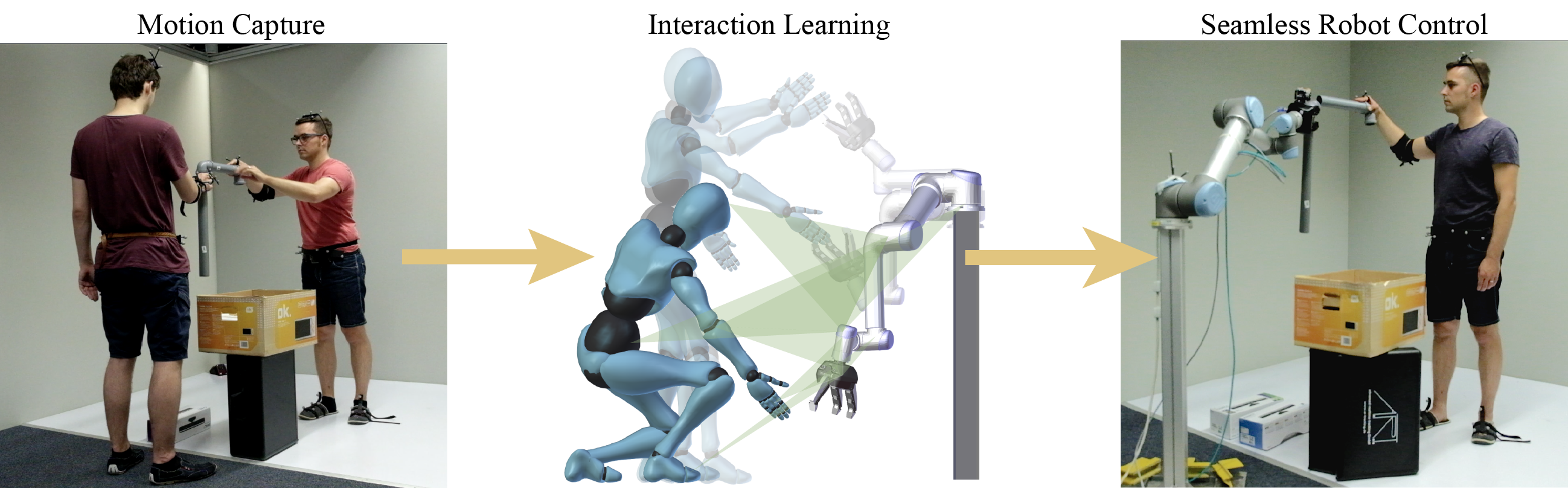

We address the problem of creating believable animations for virtual humans and humanoid robots that need to react to the body movements of a human interaction partner in real-time. Our data-driven approach uses prerecorded motion capture data of two interacting persons and performs motion adaptation during the live human-agent interaction. Extending the interaction mesh approach, our main contribution is a new scheme for efficient identification of motions in the prerecorded animation data that are similar to the live interaction. A global low-dimensional posture space serves to select the most similar interaction example, while local, more detail-rich posture spaces are used to identify poses closely matching the human motion. Using the interaction mesh of the selected motion example, an animation can then be synthesized that takes into account both spatial and temporal similarities between the prerecorded and live interactions.
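The two-stage lookup described above can be illustrated with a minimal sketch. It is not the published implementation: the linear projection matrices stand in for whatever low-dimensional posture spaces are actually learned, and all names (`project`, `select_example`, `match_pose`, the toy `examples` data) are hypothetical.

```python
import math

def project(pose, basis):
    """Project a pose vector onto a low-dimensional basis (one row per axis)."""
    return [sum(b * p for b, p in zip(row, pose)) for row in basis]

def distance(a, b):
    """Euclidean distance between two equally sized vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def select_example(live_pose, examples, global_basis):
    """Stage 1: pick the recorded example closest to the live pose in the
    shared (global) posture space."""
    query = project(live_pose, global_basis)
    best_name, best_dist = None, math.inf
    for name, example in examples.items():
        dist = min(distance(query, project(p, global_basis))
                   for p in example["poses"])
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name

def match_pose(live_pose, example):
    """Stage 2: find the frame index of the best-matching pose in the
    example's own (local, more detailed) posture space."""
    basis = example["local_basis"]
    query = project(live_pose, basis)
    dists = [distance(query, project(p, basis)) for p in example["poses"]]
    return dists.index(min(dists))

# Toy data (assumption): 3-D "poses", a 2-D global space, 3-D local spaces.
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
global_basis = [[1, 0, 0], [0, 1, 0]]
examples = {
    "handshake": {"poses": [[0, 0, 0], [1, 0, 0.1], [2, 0, 0.2]],
                  "local_basis": IDENTITY},
    "wave": {"poses": [[0, 5, 0], [0, 6, 1], [0, 7, 2]],
             "local_basis": IDENTITY},
}
live_pose = [1.1, 0.2, 0.1]

chosen = select_example(live_pose, examples, global_basis)
frame = match_pose(live_pose, examples[chosen])
```

In this sketch the coarse global space only decides which recorded interaction to use, so the finer local comparison runs over a single example instead of the whole database.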

At Blender Conf 2015 I was honored to talk about our human-character interaction framework. The presentation mainly focuses on extensions we made to Blender including motion capture addons for optical A.R.T. tracking systems and Kinects as well as Matlab bindings to compute character responses.

D. Vogt, Learning Continuous Human-Robot Interactions from Human-Human Demonstrations. Dissertation (summa cum laude), Freiberg, 2018. Bernhard-von-Cotta award for the best dissertation.

D. Vogt, S. Stepputtis, B. Jung, H. Ben Amor, One-shot Learning of Human-Robot Handovers with Triadic Interaction Meshes. Autonomous Systems, Springer, 2018. DOI:10.1007/s10514-018-9699-4

C. Gäbert, D. Vogt and B. Jung. Interactive Planning and Validation of Robot Motions using Augmented Reality. 14. Workshop Virtuelle und Erweiterte Realität der GI-Fachgruppe Virtuelle Realität und Augmented Reality. 2017.

D. Vogt, S. Stepputtis, S. Grehl, B. Jung, H. Ben Amor, A System for Learning human-robot interactions from human-human demonstrations. IEEE International Conference on Robotics and Automation (ICRA), 2017. DOI:10.1109/ICRA.2017.7989334.

D. Vogt, S. Stepputtis, B. Jung, H. Ben Amor, Learning human-robot interactions from human-human demonstrations (with applications in Lego rocket assembly). 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids). 2016. Best Video Award. DOI: 10.1109/HUMANOIDS.2016.7803267.

D. Vogt, B. Lorenz, S. Grehl, B. Jung. Behavior Generation for Interactive Virtual Humans Using Context-Dependent Interaction Meshes and Automated Constraint Extraction. Journal of Computer Animation and Virtual Worlds (CAVW). 2015. DOI: 10.1002/cav.1648.

D. Vogt, S. Grehl, E. Berger, H. Ben Amor, B. Jung. A Data-Driven Method for Real-Time Character Animation in Human-Agent Interaction. Intelligent Virtual Agents, 14th International Conference, IVA 2014, Boston, MA, USA, August 27-29, 2014. Proceedings, Lecture Notes in Computer Science, Vol. 8637, Springer, pp. 463-476. DOI: 10.1007/978-3-319-09767-1_57

D. Vogt, H. Ben Amor, E. Berger, B. Jung. Learning Two-Person Interaction Models for Responsive Synthetic Humanoids. Journal of Virtual Reality and Broadcasting, 11(2014), No. 1.

H. Ben Amor, D. Vogt, M. Ewerton, E. Berger, B. Jung, J. Peters. Learning Responsive Robot Behavior by Imitation, Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013, pp. 3257 - 3264.

D. Vogt, E. Berger, H. Ben Amor and B. Jung. A Task-Space Two-Person Interaction Model for Human-Robot-Interaction. 10. Workshop Virtuelle und Erweiterte Realität der GI-Fachgruppe Virtuelle Realität und Augmented Reality. 2013. S. 77-84.

Z. Wang, M.P. Deisenroth, H. Ben Amor, D. Vogt, B. Schölkopf and J. Peters. Probabilistic Modeling of Human Movements for Intention Inference, RSS 2012 – Robotics: Science and Systems. 2012.

See all publications - See all videos - Google Scholar Profile - ResearchGate

"Children see magic because they look for it." -- Christopher Moore

contact at david-vogt.com